
Featured Project

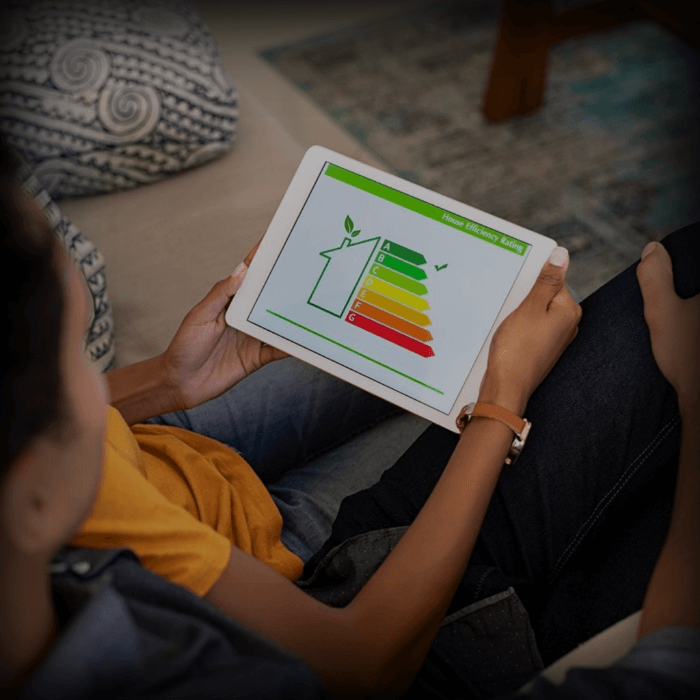

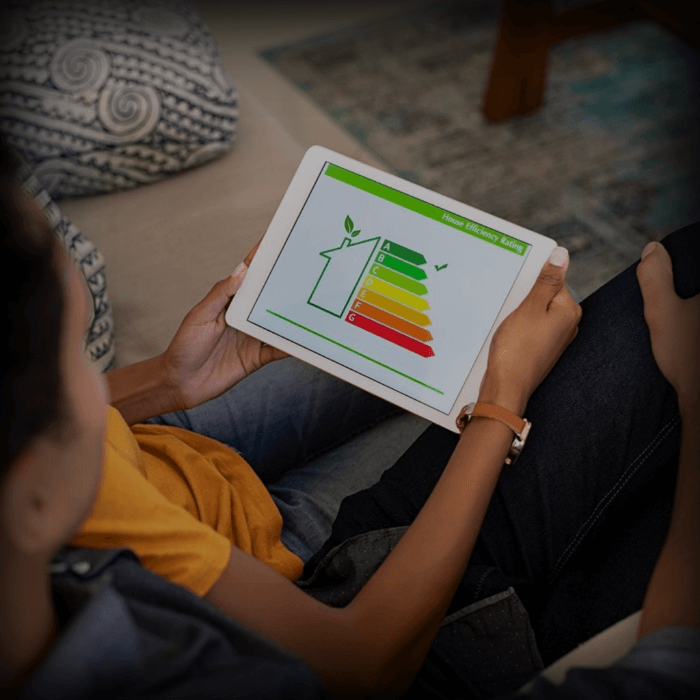

BKR Energy

Java

The BKR Energy platform is an advanced hybrid system that helps you maximize your energy savings while contributing to a greener, more sustainable future.


Kubernetes is open source, but not free

Kubernetes is a foundational technology in modern DevOps. Its flexibility, scalability, and vendor-neutral open-source model have made it the industry standard for container orchestration. Organizations benefit from faster deployments, resilient systems, and improved resource efficiency. Yet despite these advantages, FinOps Managers and DevOps teams often struggle with unexpectedly high cloud bills.

The perception that Kubernetes is “free” stems from its open-source nature. While there’s no licensing fee, running Kubernetes in production involves substantial infrastructure and operational costs. Teams migrating from simpler platforms like AWS ECS or Elastic Beanstalk often underestimate how much more expensive Kubernetes can be—and fail to plan accordingly.

This article explores the true cost of running Kubernetes in production. We’ll examine hidden expenses tied to control plane pricing, over-provisioned worker nodes, security and monitoring tooling, and the significant DevOps overhead required to manage it all. We’ll also share a real-world case study of an ECS-to-EKS migration that doubled costs—highlighting the price of complexity without proactive cost controls.
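To see how these categories add up, here is a minimal monthly cost model for a single production cluster. It is a sketch under stated assumptions: every price, node count, and staffing figure is hypothetical, and the $0.10 per cluster-hour control plane fee assumes Amazon EKS standard-support pricing, which should be confirmed against current AWS pricing. `ClusterCostInputs` and `monthly_breakdown` are illustrative names, not part of any tool.

```python
from __future__ import annotations

from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation of 24 * 365 / 12


@dataclass
class ClusterCostInputs:
    # All values are hypothetical inputs for illustration, not real billing data.
    control_plane_hourly: float   # managed control plane fee per cluster-hour (assumed)
    node_count: int               # worker nodes running all month
    node_hourly: float            # on-demand price per worker node-hour (assumed)
    tooling_monthly: float        # security and monitoring subscriptions (assumed)
    engineer_fte: float           # share of one engineer's time spent on the cluster
    engineer_monthly_cost: float  # loaded monthly cost of one engineer (assumed)


def monthly_breakdown(c: ClusterCostInputs) -> dict[str, float]:
    """Return the monthly cost of each category: control plane, nodes, tooling, people."""
    return {
        "control_plane": c.control_plane_hourly * HOURS_PER_MONTH,
        "worker_nodes": c.node_count * c.node_hourly * HOURS_PER_MONTH,
        "tooling": c.tooling_monthly,
        "devops_overhead": c.engineer_fte * c.engineer_monthly_cost,
    }


if __name__ == "__main__":
    inputs = ClusterCostInputs(
        control_plane_hourly=0.10,
        node_count=6,
        node_hourly=0.20,
        tooling_monthly=1_500.0,
        engineer_fte=0.5,
        engineer_monthly_cost=12_000.0,
    )
    breakdown = monthly_breakdown(inputs)
    for category, cost in breakdown.items():
        print(f"{category:>16}: ${cost:,.2f}")
    print(f"{'total':>16}: ${sum(breakdown.values()):,.2f}")
```

With these assumed inputs, the control plane fee is the smallest line item (about $73 of roughly $8,449 per month), while worker nodes and DevOps time dominate the total.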

At Datawalls, we believe Kubernetes requires cost-aware design and ongoing financial visibility. Our experts help organizations implement proactive optimization strategies, embedded from the first design sprint and reinforced through regular FinOps reviews.

Open-source software often gives the impression of zero cost, but Kubernetes highlights why this assumption can be misleading. While the binaries and source code are free to download, the operational environment is not. Running Kubernetes clusters in production requires compute resources, storage, networking, observability tools, and continuous human expertise. Without careful oversight, these costs can quickly outweigh the value delivered.
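One way to put a number on compute waste that goes unchecked is to price the idle share of each worker node. The sketch below uses hypothetical node sizes, average CPU usage, and on-demand prices, and it deliberately ignores memory, storage, and networking; `Node` and `idle_cost_per_hour` are illustrative names, not part of any tool.

```python
from __future__ import annotations

from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation of 24 * 365 / 12


@dataclass
class Node:
    name: str
    vcpu_capacity: float  # allocatable vCPUs on the node (assumed)
    vcpu_used: float      # average vCPUs actually consumed by pods (assumed)
    hourly_price: float   # on-demand price per node-hour (assumed)


def idle_cost_per_hour(nodes: list[Node]) -> float:
    """Price the unused CPU share of each node and sum it across the cluster.

    CPU-only simplification: memory, storage, and network are ignored.
    """
    total = 0.0
    for node in nodes:
        # Cap at 1.0 so a node reporting usage above capacity never yields negative idle cost.
        used_fraction = min(node.vcpu_used / node.vcpu_capacity, 1.0)
        total += (1.0 - used_fraction) * node.hourly_price
    return total


if __name__ == "__main__":
    # Three hypothetical 4-vCPU nodes, sized for peak load but mostly idle.
    cluster = [
        Node("node-a", 4.0, 1.0, 0.20),
        Node("node-b", 4.0, 1.5, 0.20),
        Node("node-c", 4.0, 0.5, 0.20),
    ]
    hourly = idle_cost_per_hour(cluster)
    print(f"idle cost: ${hourly:.2f}/hour, about ${hourly * HOURS_PER_MONTH:,.2f}/month")
```

In this assumed snapshot, three quarters of the CPU the nodes are billed for sits idle, roughly $0.45 of every $0.60 spent per hour.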

Unlike simpler container platforms, Kubernetes introduces a steep learning curve. Every feature—namespaces, ingress controllers, persistent volumes, or service meshes—adds layers of configuration and maintenance. While these features empower scalability and resilience, they also require specialized DevOps expertise to manage effectively. The more complex the architecture becomes, the more costly it is to operate and secure.

One of the biggest mistakes organizations make is deploying Kubernetes without implementing cost monitoring from day one. Financial observability should be treated with the same importance as application observability. Tools like Kubecost, Prometheus, and cloud-native billing dashboards can provide visibility into which namespaces, teams, or services are driving costs. This data empowers engineering and finance teams to collaborate and make smarter architectural decisions.
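The namespace-level view these tools provide can be approximated with a simple request-based allocation: because the Kubernetes scheduler reserves node capacity according to each pod's resource requests, a namespace effectively pays for what it requests, not only for what it uses. The sketch below is a simplified illustration, not how Kubecost or any other specific tool computes costs; the pods, the blended price per vCPU-hour, and the names `Pod` and `cost_by_namespace` are hypothetical, and memory, storage, and network costs are left out.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation of 24 * 365 / 12


@dataclass
class Pod:
    namespace: str
    cpu_request: float  # vCPUs requested in the pod spec (resources.requests.cpu)


def cost_by_namespace(pods: list[Pod], price_per_vcpu_hour: float,
                      hours: float = HOURS_PER_MONTH) -> dict[str, float]:
    """Charge each namespace for the CPU its pods request over the period.

    Returns namespaces ordered from most to least expensive.
    """
    totals: defaultdict[str, float] = defaultdict(float)
    for pod in pods:
        totals[pod.namespace] += pod.cpu_request * price_per_vcpu_hour * hours
    return dict(sorted(totals.items(), key=lambda item: item[1], reverse=True))


if __name__ == "__main__":
    # Hypothetical workload: two checkout replicas, one search pod, one batch job.
    pods = [
        Pod("checkout", 2.0),
        Pod("checkout", 2.0),
        Pod("search", 1.0),
        Pod("batch", 0.5),
    ]
    for namespace, cost in cost_by_namespace(pods, price_per_vcpu_hour=0.05).items():
        print(f"{namespace:<10} ${cost:,.2f}/month")
```

With these assumed numbers, `checkout` accounts for about $146 of the roughly $201 monthly total, which is the kind of signal that helps engineering and finance decide where to look first. A fuller model would also charge for usage above requests and report unrequested node capacity as a separate idle cost.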

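A sustainable Kubernetes adoption strategy requires a balance between innovation and cost control. This means tracking costs from day one, building optimization into the architecture from the first design sprint, and reviewing spend regularly through FinOps so that engineering decisions stay aligned with financial outcomes.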

Kubernetes is powerful, but power comes with responsibility—and cost. Organizations that treat it as “just another open-source tool” risk bill shock and wasted resources. Those that succeed with Kubernetes are the ones that approach it with cost visibility, proactive optimization, and a commitment to aligning engineering decisions with financial outcomes.

At Datawalls, we help companies navigate this journey by building Kubernetes environments that are not only scalable and resilient but also financially sustainable.

👉 Ready to optimize your Kubernetes costs without sacrificing performance? Contact Datawalls today for a consultation.
